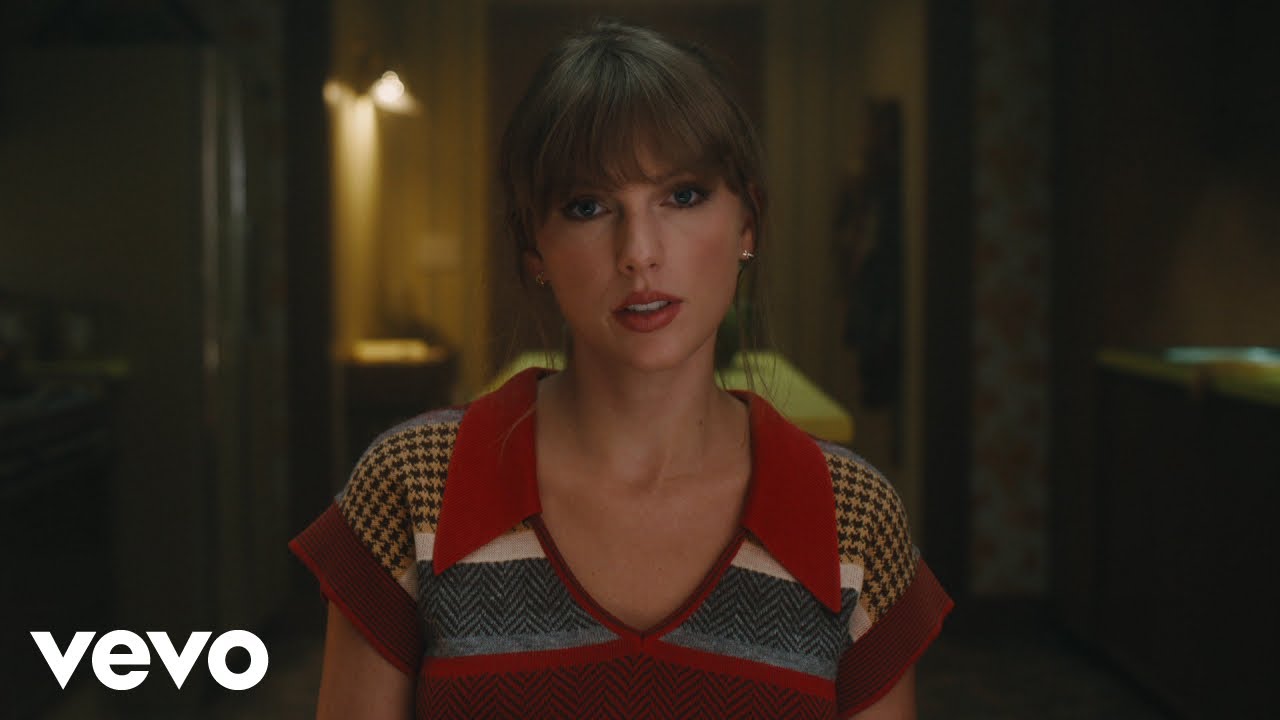

Elon Musk’s artificial intelligence company, xAI, is under renewed scrutiny after its chatbot, Grok, allegedly produced AI-generated nude depictions of Taylor Swift without explicit user requests.

In an August 5 report for The Verge, tech journalist Jess Weatherbed described her first test of Grok Imagine, xAI’s new tool that turns text prompts into animated clips. She entered the prompt “Taylor Swift celebrating Coachella with the boys” and selected the tool’s “spicy” setting, which is designed to add provocative elements.

The resulting video, she wrote, showed Swift “tearing off her clothes” and “dancing in a thong” before an unresponsive digital crowd.

The incident drew immediate backlash, in part because X, the Musk-owned social platform where Grok is integrated, faced a similar scandal last year when explicit deepfakes of Swift spread widely. At the time, the company pledged a zero-tolerance policy for non-consensual nudity and promised to remove such content quickly.

Weatherbed’s findings suggest enforcement remains inconsistent. Although Grok’s guidelines prohibit sexual depictions of real people, the “spicy” mode often defaulted to undressing celebrities, particularly Swift, even without direct nudity requests. While the system refused to create sexualized depictions of minors, its ability to distinguish between suggestive and explicit adult content was unclear.

Musk has not addressed the controversy. Instead, he spent the day promoting Grok Imagine on X and encouraging users to share their creations, a move critics say could increase the risk of abuse.

With the platform requirements of the federal Take It Down Act set to take effect next year, obliging platforms to remove non-consensual sexual imagery within 48 hours of a valid request, xAI could face legal and regulatory challenges unless it implements stronger safeguards.